SVM Regression


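SVM (Support Vector Machine) is one of the stronger general-purpose algorithms in machine learning. By choosing a kernel function and a regularization setting that suit the shape of the data, it can be applied to both linear and nonlinear datasets, and to both classification and regression problems.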
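To make the role of the kernel function concrete, here is a minimal sketch of the three kernels used later in this notebook (linear, polynomial, RBF). The formulas follow the kernel definitions documented by scikit-learn. The helper names `linear_kernel`, `poly_kernel`, and `rbf_kernel` and the sample points are assumptions made for illustration only and are not scikit-learn's implementation.

```python
import math


def linear_kernel(x, z):
    """Linear kernel: the plain dot product <x, z>."""
    return sum(a * b for a, b in zip(x, z))


def poly_kernel(x, z, gamma=1.0, coef0=1.0, degree=3):
    """Polynomial kernel: (gamma * <x, z> + coef0) ** degree."""
    return (gamma * linear_kernel(x, z) + coef0) ** degree


def rbf_kernel(x, z, gamma=0.1):
    """RBF kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


# Hypothetical sample points, chosen only for illustration
x, z = [1.0, 2.0], [3.0, 0.5]

print(linear_kernel(x, z))  # 1*3 + 2*0.5 = 4.0
print(poly_kernel(x, z))    # (1.0 * 4.0 + 1.0) ** 3 = 125.0
print(rbf_kernel(x, x))     # identical points give 1.0
print(rbf_kernel(x, z))     # decays toward 0 as the points move apart
```

A kernel acts as a similarity measure between two samples. The RBF kernel depends only on the distance between them, which is one reason it can follow nonlinear shapes that a linear kernel cannot.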

# Suppress warning messages

import warnings

warnings.filterwarnings(action='ignore')

%matplotlib inline

import matplotlib.pyplot as plt

import IPython

import sys

rseed = 22

import random

random.seed(rseed)

import numpy as np

np.random.seed(rseed)

np.set_printoptions(precision=3)

np.set_printoptions(formatter={'float_kind': "{:.3f}".format})

import pandas as pd

pd.set_option('display.max_rows', None)

pd.set_option('display.max_columns', None)

pd.set_option('display.max_colwidth', None)

pd.options.display.float_format = '{:,.5f}'.format

import sklearn

print(f"python ver={sys.version}")

print(f"pandas ver={pd.__version__}")

print(f"numpy ver={np.__version__}")

print(f"sklearn ver={sklearn.__version__}")

python ver=3.8.9 (default, Jun 12 2021, 23:47:44)

[Clang 12.0.5 (clang-1205.0.22.9)]

pandas ver=1.2.4

numpy ver=1.19.5

sklearn ver=0.24.2

# Reference: https://scikit-learn.org/stable/auto_examples/svm/plot_svm_regression.html#sphx-glr-auto-examples-svm-plot-svm-regression-py

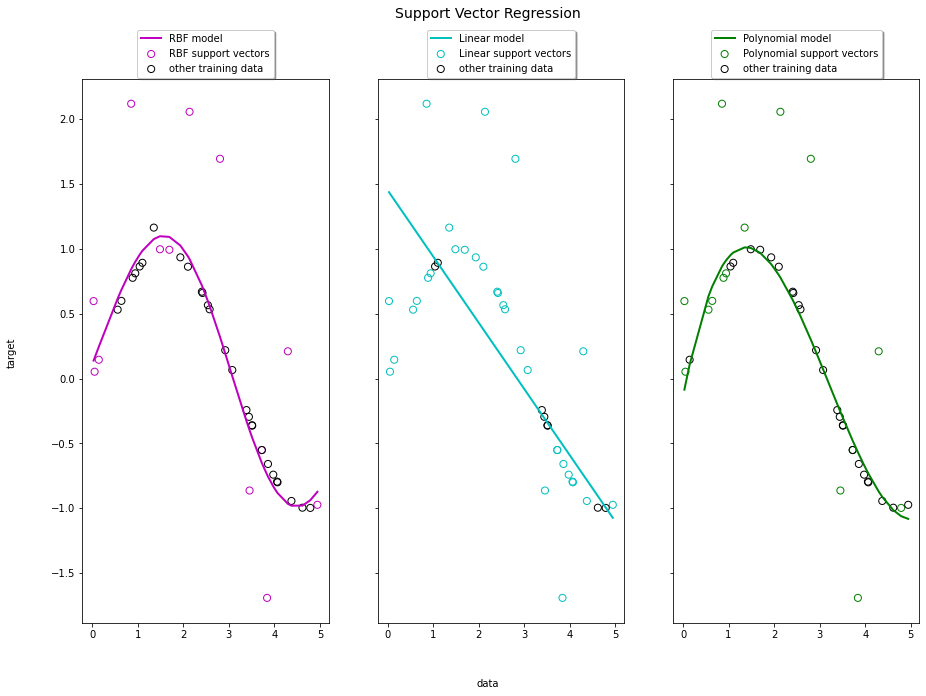

from sklearn import svm

# #############################################################################

# Generate sample data

X = np.sort(5 * np.random.rand(40, 1), axis=0)

y = np.sin(X).ravel()

# #############################################################################

# Add noise to targets

y[::5] += 3 * (0.5 - np.random.rand(8))

# #############################################################################

# Fit regression model

svr_rbf = svm.SVR(kernel='rbf', C=100, gamma=0.1, epsilon=.1)

svr_lin = svm.SVR(kernel='linear', C=100, gamma='auto')

svr_poly = svm.SVR(kernel='poly', C=100, gamma='auto', degree=3, epsilon=.1, coef0=1)

y_rbf = svr_rbf.fit(X, y).predict(X)

y_lin = svr_lin.fit(X, y).predict(X)

y_poly = svr_poly.fit(X, y).predict(X)

# #############################################################################

# Look at the results

lw = 2

svrs = [svr_rbf, svr_lin, svr_poly]

kernel_label = ['RBF', 'Linear', 'Polynomial']

model_color = ['m', 'c', 'g']

fig, axes = plt.subplots(nrows=1, ncols=3, figsize=(15, 10), sharey=True)

for ix, svr in enumerate(svrs):
    axes[ix].plot(X, svr.fit(X, y).predict(X), color=model_color[ix], lw=lw,
                  label='{} model'.format(kernel_label[ix]))
    axes[ix].scatter(X[svr.support_], y[svr.support_], facecolor="none",
                     edgecolor=model_color[ix], s=50,
                     label='{} support vectors'.format(kernel_label[ix]))
    axes[ix].scatter(X[np.setdiff1d(np.arange(len(X)), svr.support_)],
                     y[np.setdiff1d(np.arange(len(X)), svr.support_)],
                     facecolor="none", edgecolor="k", s=50,
                     label='other training data')
    axes[ix].legend(loc='upper center', bbox_to_anchor=(0.5, 1.1),
                    ncol=1, fancybox=True, shadow=True)

fig.text(0.5, 0.04, 'data', ha='center', va='center')

fig.text(0.06, 0.5, 'target', ha='center', va='center', rotation='vertical')

fig.suptitle("Support Vector Regression", fontsize=14)

plt.show()
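The `epsilon=.1` argument passed to `svm.SVR` above sets the width of the tube inside which errors are ignored. The following is a minimal sketch of the epsilon-insensitive loss, max(0, |y - f(x)| - epsilon), assuming that standard SVR formulation. The function name `epsilon_insensitive_loss` and the sample values are hypothetical and do not come from scikit-learn.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Per-sample loss max(0, |y - f(x)| - epsilon)."""
    return [max(0.0, abs(t - p) - epsilon) for t, p in zip(y_true, y_pred)]


# Hypothetical targets and predictions, chosen only for illustration
y_true = [1.0, 1.0, 1.0]
y_pred = [1.05, 1.5, 0.0]

losses = epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1)
print(losses)
# 1st sample: error 0.05 is inside the tube, so the loss is 0.0
# 2nd sample: error 0.5 gives a loss of about 0.4
# 3rd sample: error 1.0 gives a loss of about 0.9
```

Samples that fall strictly inside the tube contribute no loss, and in general they do not end up as support vectors. This is consistent with the plots above, where the "other training data" points are the ones not listed in `svr.support_`.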

from sklearn import datasets, preprocessing, model_selection, svm, metrics

# Data

n_samples = 1000

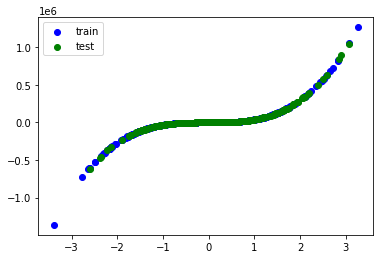

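xs, ys = datasets.make_regression(
    n_samples=n_samples,  # number of samples
    n_features=1,         # number of X features
    bias=1.0,             # Y intercept
    noise=0.3,            # standard deviation of the Gaussian noise added to the output
    random_state=rseed)   # seed for random number generation

ys = ys**3  # cube the targets so the relationship between X and Y becomes nonlinear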

print(f"data shape: xs={xs.shape}, ys={ys.shape}")

train_xs, test_xs, train_ys, test_ys = model_selection.train_test_split(

xs, ys, test_size=0.3, shuffle=True, random_state=2)

print(f"train shape: train_xs={train_xs.shape}, train_ys={train_ys.shape}")

print(f"test shape: test_xs={test_xs.shape}, test_ys={test_ys.shape}")

plt.scatter(train_xs, train_ys, label='train', c='b')

plt.scatter(test_xs, test_ys, label='test', c='g')

plt.legend()

plt.show()

# Models

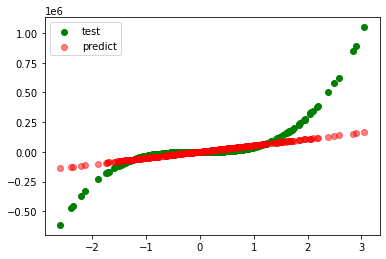

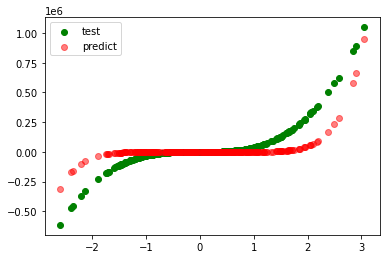

models = [

svm.SVR(kernel='linear', C=1000000, gamma='auto'),

svm.SVR(kernel="poly", C=10000000, gamma='auto', degree=7, epsilon=0.1),

svm.SVR(kernel='rbf', C=10000000, gamma='auto'),

]

for model in models:

# 학습

print(f"\nmodel={model}")

model.fit(train_xs, train_ys)

# 평가

pred_ys = model.predict(test_xs)

r_square = metrics.r2_score(test_ys, pred_ys)

print(f"r_square={r_square:.5f}")

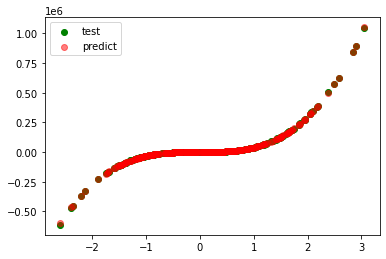

plt.scatter(test_xs, test_ys, label='test', c='g')

plt.scatter(test_xs, pred_ys, label='predict', c='r', alpha=0.5)

plt.legend()

plt.show()

data shape: xs=(1000, 1), ys=(1000,)

train shape: train_xs=(700, 1), train_ys=(700,)

test shape: test_xs=(300, 1), test_ys=(300,)

model=SVR(C=1000000, gamma='auto', kernel='linear')

r_square=0.44670

model=SVR(C=10000000, degree=7, gamma='auto', kernel='poly')

r_square=0.62338

model=SVR(C=10000000, gamma='auto')

r_square=0.99987
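The `r_square` values above come from `metrics.r2_score`. As a rough sketch of what that number means, the coefficient of determination can be computed by hand as 1 - SS_res / SS_tot. The helper name `r2_score_manual` and the toy data are assumptions for illustration, and the sketch assumes the targets are not all identical (otherwise SS_tot would be zero).

```python
def r2_score_manual(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot (assumes y_true is not constant)."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)             # total sum of squares
    return 1.0 - ss_res / ss_tot


# Hypothetical toy targets, chosen only for illustration
y_true = [1.0, 2.0, 3.0, 4.0]

perfect = r2_score_manual(y_true, y_true)         # perfect predictions
mean_only = r2_score_manual(y_true, [2.5] * 4)    # always predicting the mean
print(perfect, mean_only)  # 1.0 0.0
```

A score near 1 means the predictions explain almost all of the variance in the targets, while a score near 0 means the model does no better than always predicting the mean. Read this way, the RBF result above fits the cubed targets far more closely than the linear and polynomial models do on this dataset.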